MVP Development Guide 2026: Process, Costs, and Real Examples


MVP development in 2026 means building the smallest possible product that proves value, while using AI to accelerate validation, automate repetitive engineering, and forecast user behavior. AI-assisted design, LLMs for research and code generation, and composable, API-first architectures let teams move from idea → validated learning far faster than before.

Why it matters: VCs and corporate innovation teams now expect measurable signals (retention, engagement, early revenue) — not slides. With global startup failure rates still high (many analyses show ~80–90% long-term failure or a ~50% five-year survival band depending on source), a validated MVP is often the difference between follow-on funding and shutdown.

How AI changed the process: AI copilots and generative models reduce boilerplate, speed user-research synthesis, generate wireframes and tests, and create working code stubs — in practice, teams report meaningful task speedups (some experiments show 30–60% faster task completion in coding tasks; enterprise rollouts report similar gains). That shortens MVP cycles and reduces early engineering cost.

High-level costs and timelines to expect:

- simple MVP $30k–$55k (5–8 weeks);

- standard SaaS $55k–$140k (8–14 weeks);

- AI-powered MVP $140k–$300k+ (3–6 months).

These ranges assume professional teams, basic infra, and essential analytics. (Detailed pricing below.)

| Aspect | Simple MVP | Standard SaaS MVP | AI-Powered MVP |
|---|---|---|---|
| Core Focus | Basic user flow (e.g., login + 1 feature) | Multi-user dashboard + integrations | Predictive modeling + automation |
| Timeline | 5–8 weeks | 8–14 weeks | 10–16 weeks |
| Cost Range | $30K–$55K | $55K–$140K | $140K–$300K+ |
| Tech Stack | React / Node.js + AWS | Flutter / Python + GCP | LLMs (Claude / Llama) + Serverless |
| Key Metric | User sign-ups (target: 100+) | Retention (30% Week 1) | Engagement score (AI-driven: 40% uplift) |
| Validation Tool | Landing page MVP | Beta cohort testing | AI-simulated feedback |

What Is an MVP in 2026?

An MVP is the simplest version of your product that real customers can use. It has just enough features to solve the main problem, without any unnecessary extras. The goal is to test if people actually want your product before you spend time and money building features they might not need.

Think of it like this: If you want to start a taxi service, your MVP isn't a fancy app with ratings, rewards programs, and multiple payment options. It's a basic app that lets people request a ride and pay for it. That's what Uber did at first.

How Is 2026 Different?

In 2026, AI helps you build MVPs faster and smarter:

- AI tools can write code for you

- AI analyzes customer feedback automatically

- AI predicts which features users will actually use

- AI helps you design screens and user flows

This means you can test your idea much faster and cheaper than before.

MVP vs Other Product Types

People often confuse MVPs with prototypes and other concepts. Here's the difference:

| Type | What It Is | Time Needed | Cost | Best For |
|---|---|---|---|---|
| MVP | Real working product that customers can use and buy | 5–24 weeks | $30,000–$300,000+ | Testing if people will actually pay for your idea |
| Prototype | Visual demo that looks real but doesn't actually work | 1–4 weeks | $5,000–$25,000 | Showing your idea to investors or teammates |
| POC (Proof of Concept) | Basic test to see if your technology works | 2–8 weeks | $10,000–$50,000 | Testing if your technical approach is possible |
| MLP (Minimum Lovable Product) | MVP with better design and user experience | 8–20 weeks | $60,000–$200,000 | Entering markets where design matters a lot |
| Concierge MVP | You manually do the work to test if people want it | 1–6 weeks | $2,000–$15,000 | Testing demand before building anything |
| Micro-MVP | The absolute smallest version to test one thing | 2–6 weeks | $15,000–$50,000 | Testing one specific assumption very quickly |

The key point: Only an MVP gives you real data from real customers using a real product. Prototypes look nice, but don't prove that anyone will buy. MVPs prove there's a market.

Why You Need an MVP in 2026

Speed Wins

The market moves fast now. Companies that launch working products in 3-6 months win. Companies that spend 2 years building the "perfect" product lose because competitors get there first.

With AI tools, building products is faster than ever. Your competitors are using these tools too. If you don't move quickly, someone else will build your idea first.

Investors Want Proof

Investors used to fund ideas. Now they want proof. They want to see:

- Real users are testing your product

- Data showing people come back and use it

- Evidence that people will pay for it

Without an MVP, raising money takes 4-5 times longer. With an MVP showing real traction, investors pay attention.

A study of startups from 2024-2025 found that 67% of failures happened because they built products nobody wanted. They didn't fail because of bad code or technical problems—they failed because they didn't test if people actually wanted what they were building.

Competition Is Intense

Every market is crowded now:

- 40+ new health tech products launch every month

- 100+ new fintech companies start every quarter

- Even small niche markets have 10-20 competitors

In this environment, you can't afford to guess. You need to test quickly, learn fast, and change direction based on real feedback. That's what MVPs let you do.

Building a full product without testing costs an average of $800,000. And 72% of the time, that product fails. Building an MVP first costs $30,000-$300,000 and helps you avoid wasting the other $500,000+ if your idea doesn't work.

AI Makes MVPs Smarter

AI tools in 2026 help you make better decisions:

- They predict which users will stop using your product

- They identify which features people actually use

- They analyze feedback from thousands of users automatically

Startups using AI during their MVP phase are 40% more likely to find product-market fit and iterate 60% faster than startups doing everything manually.

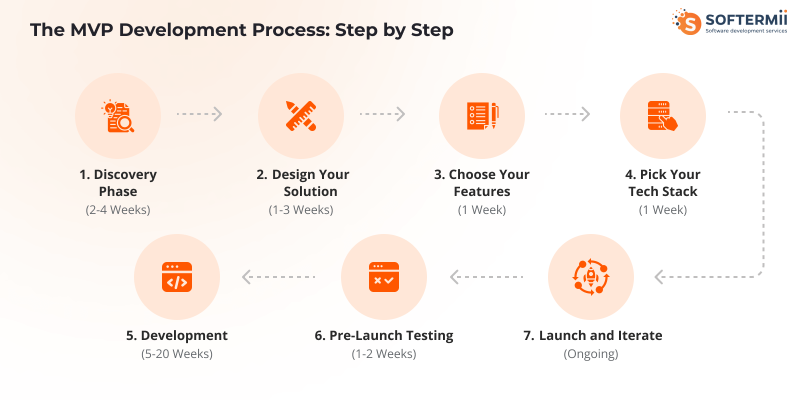

The MVP Development Process: Step by Step

Step 1: Discovery Phase (2-4 Weeks)

This is where you determine whether your idea solves a real problem. Don't skip this step—it saves you from building something nobody wants.

What you do:

Research the market with AI: Use AI tools to analyze thousands of customer reviews, social media posts, and forum discussions. Look for patterns in what people complain about. If 100 people mention the same problem, that's a real problem worth solving.

For example, if you're building a project management tool, AI can scan through reviews of Asana, Monday.com, and Trello to find what users hate most. Maybe they all say "it's too complicated" or "it doesn't integrate with our other tools."
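To make the pattern-finding idea concrete, here is a minimal sketch that assumes you have already exported review text into a Python list. It counts how many reviews mention each complaint theme. The theme names and keywords are hypothetical placeholders; a real pipeline would more likely use an LLM or embedding-based clustering than fixed keywords, but the output (themes ranked by how many people raise them) is the same kind of signal.

```python
from collections import Counter

# Hypothetical complaint themes and the keywords assumed to signal them.
THEMES = {
    "too complicated": ["complicated", "confusing", "steep learning curve"],
    "poor integrations": ["integrate", "integration", "sync"],
    "pricing": ["expensive", "price", "cost"],
}


def count_themes(reviews: list[str]) -> Counter:
    """Count how many reviews mention each theme (each review counts at most once per theme)."""
    counts: Counter = Counter()
    for review in reviews:
        text = review.lower()
        for theme, keywords in THEMES.items():
            if any(keyword in text for keyword in keywords):
                counts[theme] += 1
    return counts


if __name__ == "__main__":
    sample_reviews = [
        "Way too complicated for a small team.",
        "Doesn't integrate with our CRM, so we copy data by hand.",
        "Confusing UI and expensive for what it does.",
        "Setup was confusing, and sync with Slack keeps breaking.",
    ]
    for theme, n in count_themes(sample_reviews).most_common():
        print(f"{theme}: {n} of {len(sample_reviews)} reviews")
```

Keyword matching is crude (it misses paraphrases and can produce false hits), which is exactly why teams swap in an LLM classifier once they know which themes to look for.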

Talk to real people: Interview at least 30 people in your target market. Ask them about their problems, not about your solution. You want to hear them describe the problem in their own words. If 70% of people describe the same problem the same way, you've found something real.

Bad question: "Would you use an app that does X?" Good question: "Tell me about the last time you struggled with X."

Analyze competitors: Look at 20-50 competitors (AI can help you find them quickly). Study:

- What features they offer

- What customers complain about in reviews

- What's missing that people keep asking for

The gaps are your opportunities.

What you get:

- A clear problem statement that real people confirmed

- A description of who has this problem (your target customer)

- A list of competitors and how you're different

- Proof that people will pay to solve this problem

Cost: $5,000-$15,000

Time: 2-4 weeks

Step 2: Design Your Solution (1-3 Weeks)

Now you design exactly what your MVP will do and how it will work.

Define your value: Write one sentence that explains how your product solves the problem. Be specific about the outcome.

Weak: "AI-powered project management."

Strong: "Cut project setup time from 4 hours to 15 minutes with AI templates."

Map the core user journey: List the 3-5 main things a user must do to get value from your product. For a food delivery app, that's:

- Browse restaurants

- Order food

- Pay

- Track delivery

- Rate the order

Everything else (filters, favorites, loyalty programs, dietary preferences) can wait. Those are nice-to-have features.

Create wireframes: Draw simple sketches of each screen. In 2026, AI design tools can generate these for you in minutes. You give them the user flow, and they create basic screen layouts. You then refine them.

Don't design every possible scenario. Focus on the "happy path"—when everything goes right. You'll handle errors and edge cases later.

What you get:

- Detailed wireframes showing every screen

- A clickable prototype that users can test

- A basic design system (colors, fonts, buttons)

Cost: $8,000-$25,000

Time: 1-3 weeks

Step 3: Choose Your Features (1 Week)

This is the hardest part: deciding what to build and what to skip. Most MVPs fail because they try to build too much.

Use the MoSCoW method:

- Must Have: Features your product can't work without. If you remove it, the product becomes useless.

- Should Have: Features that improve the experience, but users can work around.

- Could Have: Nice additions if you have time and money.

- Won't Have: Features you deliberately won't build now.

For an MVP, build only the Must Haves, plus perhaps 1-2 Should Haves that set you apart from competitors.

Example - Food Delivery MVP:

Must Have:

- Restaurant list

- Menu display

- Cart and checkout

- Order tracking

- Payment processing

Should Have:

- Restaurant ratings

- Estimated delivery time

- Order history

Could Have:

- Favorites list

- Dietary filters

- Scheduled orders

Won't Have (for now):

- Loyalty program

- Group ordering

- Restaurant reservation system

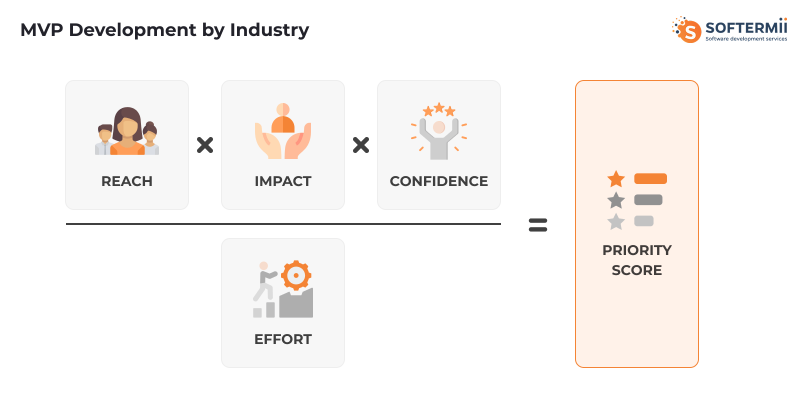

Use RICE scoring to rank features with data. For each feature, estimate:

- Reach: How many users will use it per month?

- Impact: How much does it help them? (score 0.25 to 3)

- Confidence: How sure are you? (percent)

- Effort: How many weeks to build?

Score each feature as (Reach × Impact × Confidence) ÷ Effort, and build the highest-scoring features first.
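RICE multiplies Reach, Impact, and Confidence and divides by Effort, so a cheap feature with moderate reach can outrank an expensive one with high impact. The sketch below ranks three of the food delivery Should Haves; every estimate in it is a hypothetical number chosen for illustration, not data from a real product.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    reach: int         # users per month expected to use the feature
    impact: float      # 0.25 (minimal) to 3 (massive)
    confidence: float  # 0.0 to 1.0, e.g. 0.8 for 80%
    effort: float      # person-weeks to build

    @property
    def rice(self) -> float:
        # RICE = (Reach x Impact x Confidence) / Effort
        return self.reach * self.impact * self.confidence / self.effort


def prioritize(features: list[Feature]) -> list[Feature]:
    """Return features sorted from highest to lowest RICE score."""
    return sorted(features, key=lambda f: f.rice, reverse=True)


if __name__ == "__main__":
    # Hypothetical estimates for three food delivery Should Haves.
    backlog = [
        Feature("Restaurant ratings", reach=4000, impact=1, confidence=0.8, effort=2),
        Feature("Estimated delivery time", reach=5000, impact=2, confidence=0.5, effort=3),
        Feature("Order history", reach=2000, impact=0.5, confidence=1.0, effort=1),
    ]
    for f in prioritize(backlog):
        print(f"{f.name}: {f.rice:.0f}")
```

With these inputs, estimated delivery time (about 1,667) edges out ratings (1,600), and order history (1,000) comes last. Because low confidence pulls a score down sharply, the cheapest way to raise a feature's rank is often to validate it, not to build it.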

What you get:

- A locked feature list for your MVP

- Everything else is saved for version 2.0

Cost: Included in design phase

Time: 1 week

Step 4: Pick Your Tech Stack (1 Week)

Choose the technologies you'll use to build your MVP. In 2026, the right choice balances speed, cost, and future scalability.

| Category | Option | Description |
|---|---|---|
| Frontend | React | Most popular, huge community, lots of libraries |
| | Next.js | React-based, great for fast, SEO-friendly websites |
| | Flutter | Build for web, iOS, and Android with one codebase |
| Backend | Node.js | Fast, JavaScript-based, good for real-time features |
| | Python | Great if you're using AI/ML features |
| | Ruby on Rails | Fast development, good for standard web apps |
| Database | PostgreSQL | Reliable, handles complex data |
| | MongoDB | Flexible, good for changing requirements |
| | Firebase | Fast setup, handles hosting and authentication too |
| AI Integration | OpenAI API | For GPT models |
| | Anthropic API | For Claude |
| | Hugging Face | For open-source models |
| Cloud Hosting | AWS | Most features, most complex |
| | Google Cloud (GCP) | Good AI tools built in |
| | Azure | Good for enterprise clients |
| | Vercel / Netlify | Easiest for simple apps |

Key decisions:

Monolith vs Microservices: For MVPs, start with a monolith (everything in one application). It's simpler and faster. You can break it into microservices later if needed.

Serverless vs Traditional: Serverless (AWS Lambda, Google Cloud Functions) costs less when traffic is low, because you pay per request rather than for an always-on machine. Traditional servers cost more upfront but can be cheaper at scale.
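The serverless-versus-server trade-off is a break-even calculation: pay-per-use cost grows linearly with traffic, while a server costs roughly the same every month. The sketch below finds that crossover point. Both prices are hypothetical placeholders, not any provider's actual rates; plug in your own quotes (including gateway, storage, and egress) before deciding.

```python
import math


def serverless_monthly_cost(requests: int, price_per_million: float) -> float:
    """Pay-per-use: cost scales linearly with request volume."""
    return requests / 1_000_000 * price_per_million


def break_even_requests(server_monthly_cost: float, price_per_million: float) -> int:
    """Monthly request volume at which serverless spend reaches the fixed server cost."""
    return math.ceil(server_monthly_cost / price_per_million * 1_000_000)


if __name__ == "__main__":
    # Hypothetical all-in prices; substitute your provider's real pricing.
    PRICE_PER_MILLION = 5.00  # serverless cost per 1M requests (compute + gateway)
    SERVER_MONTHLY = 150.00   # always-on server or container, per month

    for requests in (100_000, 10_000_000, 100_000_000):
        cost = serverless_monthly_cost(requests, PRICE_PER_MILLION)
        cheaper = "serverless" if cost < SERVER_MONTHLY else "server"
        print(f"{requests:>11,} req/month: serverless ${cost:,.2f} vs server ${SERVER_MONTHLY:,.2f} -> {cheaper}")
    print(f"break-even: {break_even_requests(SERVER_MONTHLY, PRICE_PER_MILLION):,} requests/month")
```

Under these assumptions the crossover sits at 30 million requests per month, far beyond what most MVPs see, which is why serverless is usually the cheaper starting point.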

What you get:

- Complete tech stack documentation

- Architecture diagram

- Development environment setup

Cost: Included in planning

Time: 1 week

Step 5: Development (5-20 Weeks)

This is where you actually build the product. In 2026, AI coding assistants can write 40-60% of your code, but you still need experienced developers to guide them and handle complex logic.

Sprint planning: Break the work into 1-2 week sprints. Each sprint should deliver working features you can test.

Frontend development: Build the user interface—everything users see and interact with. This includes:

- All screens from your wireframes

- Forms and buttons

- Responsive design (works on phone, tablet, desktop)

- Loading states and basic error messages

Backend development: Build the server logic:

- User authentication (signup, login)

- Database operations (save, retrieve, update data)

- Business logic (how your product actually works)

- API endpoints (how frontend talks to backend)

AI/ML integration: If your product uses AI:

- Connect to AI APIs (OpenAI, Anthropic, etc.)

- Build prompts and logic for AI features

- Handle AI response processing

- Set up fallbacks if AI fails
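"Set up fallbacks if AI fails" usually means retrying, then switching to a backup model, then degrading gracefully. Here is a minimal sketch of that chain, assuming each provider is wrapped as a plain function that takes a prompt and returns text (or raises). `primary_model` and `backup_model` are hypothetical stand-ins, not real SDK calls.

```python
import time
from typing import Callable

# A provider is any function that takes a prompt and returns text, raising on failure.
ModelCall = Callable[[str], str]

FALLBACK_MESSAGE = "Sorry, this feature is temporarily unavailable. Please try again later."


def generate_with_fallback(
    prompt: str,
    providers: list[ModelCall],
    retries_per_provider: int = 2,
    backoff_seconds: float = 0.5,
) -> str:
    """Try each provider in order with exponential backoff, then return a safe default."""
    for call in providers:
        for attempt in range(retries_per_provider):
            try:
                result = call(prompt)
                if result.strip():  # treat empty output as a failure too
                    return result
            except Exception:  # in production, catch your SDK's specific error types
                pass
            if attempt < retries_per_provider - 1:
                time.sleep(backoff_seconds * 2 ** attempt)
    return FALLBACK_MESSAGE


# Hypothetical stand-ins for real SDK calls (a primary and a backup model).
def primary_model(prompt: str) -> str:
    raise TimeoutError("primary model timed out")


def backup_model(prompt: str) -> str:
    return f"[backup] summary of: {prompt}"


if __name__ == "__main__":
    print(generate_with_fallback("Summarize this ticket", [primary_model, backup_model], backoff_seconds=0.1))
```

Returning a fixed message keeps the core user flow alive even when every model is down. In a real product you would also log each failure so repeated fallbacks show up in monitoring.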

Database setup: Structure your data properly from the start. Poor database design causes problems later that are expensive to fix.

Testing: Test as you build, not just at the end. This includes:

- Unit tests (individual functions work)

- Integration tests (different parts work together)

- User testing (real people can actually use it)

Security basics: Even MVPs need basic security:

- Hash passwords with a slow, salted algorithm (never store them in plain text)

- Use HTTPS

- Validate user inputs

- Protect against common attacks
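Passwords should be hashed, not encrypted: you never need to recover the original, only check a guess against it. The sketch below uses PBKDF2 from Python's standard `hashlib`, with a random per-user salt and a constant-time comparison. The storage format (`iterations$salt$hash`) is an assumption for illustration, and the iteration count follows OWASP's Password Storage Cheat Sheet guidance for PBKDF2-HMAC-SHA256 at the time of writing; many teams prefer Argon2 or bcrypt through a maintained library instead.

```python
import hashlib
import hmac
import os

ITERATIONS = 600_000  # OWASP guidance for PBKDF2-HMAC-SHA256; tune for your hardware


def hash_password(password: str) -> str:
    """Return 'iterations$salt_hex$hash_hex' for storage; the plain password is never kept."""
    salt = os.urandom(16)  # unique random salt per password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return f"{ITERATIONS}${salt.hex()}${digest.hex()}"


def verify_password(password: str, stored: str) -> bool:
    """Recompute the hash with the stored salt and iteration count, then compare in constant time."""
    iterations_str, salt_hex, hash_hex = stored.split("$")
    digest = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), bytes.fromhex(salt_hex), int(iterations_str)
    )
    return hmac.compare_digest(digest.hex(), hash_hex)
```

Storing the iteration count alongside the hash lets you raise `ITERATIONS` later without breaking existing accounts.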

What you get:

- Working product you can use

- Code deployed to servers

- Basic admin panel to manage data

Cost: $20,000-$250,000 (varies widely)

Time: 5-20 weeks, depending on complexity

Step 6: Pre-Launch Testing (1-2 Weeks)

Before you launch publicly, test with a small group to catch major issues.

Usability testing: Watch 5-10 people use your product. Don't tell them what to do—just give them a goal and watch where they get confused.

Micro-cohort testing: Give access to 20-50 early users. Track:

- Do they complete the core actions?

- Do they come back?

- Where do they get stuck?

Analytics setup: Install analytics tools:

- Google Analytics 4 (GA4): Overall traffic and user behavior

- Mixpanel: Detailed user action tracking

- Hotjar: See recordings of users actually using your product

Bug fixing: Fix critical bugs that prevent core features from working. Don't try to fix every small issue—you'll fix those based on user feedback after launch.

What you get:

- A product that works for your core use cases

- Analytics that tracks everything important

- Confidence you won't crash immediately after launch

Cost: $3,000-$10,000

Time: 1-2 weeks

Step 7: Launch and Iterate (Ongoing)

Launch means making your product available to real users, not making it perfect.

Soft launch: Start with a small group (50-200 users) before going fully public. This lets you:

- Fix issues without embarrassing yourself publicly

- Gather initial feedback

- Refine your messaging

Track key metrics (KPIs):

- Activation rate: What % of signups complete the core action?

- Retention: How many users come back after 1 day, 7 days, 30 days?

- Engagement: How often do active users use your product?

- Revenue: If you're charging, what's the conversion rate?
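Activation and retention are simple ratios once events are logged consistently. The sketch below computes both from a raw event list. The `Event` shape, the event names (`signup`, `core_action`, `session`), and the use of whole days instead of timestamps are simplifying assumptions. Analytics tools such as Mixpanel compute these for you, but knowing the definitions helps you configure them correctly.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    user_id: str
    name: str  # hypothetical names: "signup", "core_action", "session"
    day: int   # days since launch (simplified stand-in for a timestamp)


def activation_rate(events: list[Event], core_action: str = "core_action") -> float:
    """Share of signed-up users who performed the core action at least once."""
    signed_up = {e.user_id for e in events if e.name == "signup"}
    activated = {e.user_id for e in events if e.name == core_action} & signed_up
    return len(activated) / len(signed_up) if signed_up else 0.0


def day_n_retention(events: list[Event], n: int) -> float:
    """Share of signed-up users who were active exactly n days after their signup day."""
    signup_day = {e.user_id: e.day for e in events if e.name == "signup"}
    returned = {
        e.user_id
        for e in events
        if e.user_id in signup_day and e.name != "signup" and e.day - signup_day[e.user_id] == n
    }
    return len(returned) / len(signup_day) if signup_day else 0.0


if __name__ == "__main__":
    log = [
        Event("u1", "signup", 0), Event("u1", "core_action", 0),
        Event("u1", "session", 1), Event("u1", "session", 7),
        Event("u2", "signup", 0), Event("u2", "session", 1),
        Event("u3", "signup", 2), Event("u3", "core_action", 3),
        Event("u4", "signup", 3),
    ]
    print(f"activation: {activation_rate(log):.0%}")
    for n in (1, 7):
        print(f"day-{n} retention: {day_n_retention(log, n):.0%}")
```

On this toy log, activation is 50%, day-1 retention 75%, and day-7 retention 25%. Defining "active" and "core action" explicitly up front matters more than the arithmetic, because different definitions can move these numbers a lot.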

Collect feedback: Talk to users constantly. The best feedback comes from:

- Watching them use your product

- In-app surveys at key moments

- One-on-one interviews with active users

- Support requests (they tell you what's broken or missing)

Iterate fast: Use what you learn to improve the product. Plan 2-week iteration cycles:

- Week 1: Analyze data, talk to users, plan changes

- Week 2: Build and deploy improvements

When to scale beyond MVP:

You're ready to build more when you see:

- 40%+ of users coming back weekly (good retention)

- Clear patterns in feature requests (users want the same things)

- Revenue or strong growth signals (if relevant)

- You've validated the core value proposition

What you get:

- Real users using your product

- Data showing what works and what doesn't

- A clear direction for version 2.0

Cost: $5,000-$20,000/month for improvements and maintenance

Time: Ongoing

MVP Development Pricing in 2026

In 2026, MVP pricing hinges on complexity, AI integration, and region, with global averages up 15% from 2025 due to talent shortages. The cost to build an MVP starts low for basic products but escalates with custom AI.

Cost Ranges

- Simple MVP: $30,000–$55,000 (e.g., landing page + basic CRUD).

- Standard SaaS MVP: $55,000–$140,000 (multi-tenant dashboard).

- AI-Powered MVP: $140,000–$300,000+ (LLM integrations, predictive features).

- Enterprise MVP: $200,000–$500,000+ (compliance-heavy, e.g., healthcare/fintech).

Cost by Component

| Component | Description | Cost Range | % of Total |
|---|---|---|---|
| Discovery | AI research + validation | $5K–$15K | 10% |
| UI/UX | Generative wireframes + prototypes | $8K–$20K | 15% |
| Frontend | React/Flutter build | $15K–$40K | 20% |
| Backend | Node.js/Python APIs | $20K–$50K | 25% |
| Database | PostgreSQL setup | $5K–$15K | 8% |
| Integrations | Stripe, Mixpanel | $5K–$20K | 10% |
| AI Modules | LLM embedding (Claude/Llama) | $20K–$80K | 15% (AI MVPs) |
| DevOps | AWS/GCP deployment | $5K–$15K | 7% |
| QA | Automated + manual testing | $8K–$20K | 10% |
| PM | Agile oversight | $10K–$25K | 10% |
| Maintenance | Post-launch (20% of dev cost/year) | $6K–$60K | Ongoing |

Timeline (2026 Norms)

- Simple MVP: 5–8 weeks (e.g., micro-MVP validation).

- Mid-Level MVP: 8–14 weeks (SaaS with integrations).

- Complex MVP: 3–6 months (AI + enterprise compliance).

Cost by Region (2026)

- US/UK: $100–$200/hr; total $100K+ (high expertise, premium).

- Eastern Europe: $50–$80/hr; total $40K–$100K (balanced quality/cost).

- LATAM: $40–$70/hr; total $30K–$80K (agile, English-fluent).

- Asia: $30–$60/hr; total $25K–$70K (speedy, scalable).
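Regional totals are essentially billable hours multiplied by the hourly rate. The sketch below reproduces that arithmetic using the rate ranges listed above. The 1,000-hour effort figure is a hypothetical estimate, and the result covers labor only (no infrastructure, tooling, or AI API spend).

```python
# Hourly rate ranges (USD) taken from the regional figures above.
REGION_RATES = {
    "US/UK": (100, 200),
    "Eastern Europe": (50, 80),
    "LATAM": (40, 70),
    "Asia": (30, 60),
}


def estimate_cost(hours: int, region: str) -> tuple[int, int]:
    """Return the (low, high) labor cost for a given number of billable hours."""
    low_rate, high_rate = REGION_RATES[region]
    return hours * low_rate, hours * high_rate


if __name__ == "__main__":
    HOURS = 1_000  # hypothetical effort estimate for a simple-to-standard MVP
    for region in REGION_RATES:
        low, high = estimate_cost(HOURS, region)
        print(f"{region}: ${low:,} to ${high:,}")
```

At 1,000 hours, each region's result falls inside the totals quoted above (for example, $50,000 to $80,000 for Eastern Europe), so the hours estimate, not the rate, is usually the bigger source of uncertainty.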

MVP Development Trends for 2026

Below are the emerging MVP development trends heading into 2026, with context and considerations for each:

AI-First MVP Development

The autonomous coding shift is real—tools like Cursor, v0, and GitHub Copilot are already generating production-ready components. The key challenge will be maintaining code quality and architectural consistency as AI generates more of the codebase. Teams that succeed will focus on prompt engineering as a core skill and establish clear guardrails for AI-generated code.

Micro-MVPs

The 2–6 week launch window is becoming the new standard, but there's a risk of trading validation quality for speed. The faster you ship, the more critical it becomes to have sharp hypotheses and meaningful success metrics. Micro-MVPs work best when testing one core assumption at a time.

AI-native Product Concepts

Multi-agent systems and domain-specific copilots are where the real product differentiation will happen. Generic AI features are already commoditized—the value is in vertical-specific training and workflow integration. Think "AI that understands our users' domain language" rather than "we added ChatGPT."

No-Code + Pro-Code Hybrid

No-code + pro-code hybrids can deliver 40–60% speed gains, but claims about their long-term maintainability need scrutiny. The winning pattern seems to be: no-code for UI/workflows, pro-code for business logic and integrations. This keeps escape hatches open when you need custom functionality.

Composable Architecture

API-first and modular thinking isn't new, but it's becoming non-negotiable. The trend is toward smaller, interchangeable components rather than monolithic platforms. This aligns well with the need to pivot quickly based on MVP learnings.

Predictive User Modeling

This is powerful but often over-engineered in early stages. For true MVPs, start with basic cohort analysis and A/B testing before investing in sophisticated prediction models. The data quality from your first users often isn't robust enough for meaningful forecasting anyway.

The meta-trend: MVPs in 2026 will be less about "build fast" and more about "learn fast." The bottleneck is shifting from development speed to insight generation and decision-making velocity.

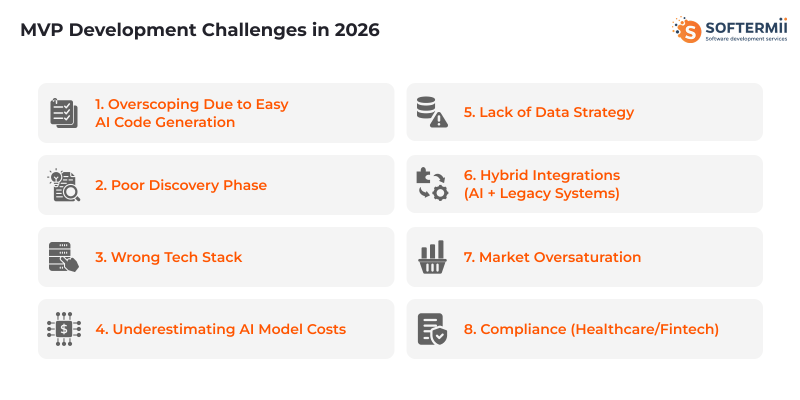

MVP Development Challenges in 2026

2026 amplifies MVP challenges amid AI hype, but structured solutions mitigate risks.

1. Overscoping Due to Easy AI Code Generation

Why It Happens

AI coding tools make feature development feel effortless, creating the illusion that building more equals better validation. Teams confuse "can build quickly" with "should build now."

Impact

- Bloated MVPs that test multiple hypotheses simultaneously

- Unclear signal on what actually drives user value

- Extended time-to-market despite faster coding

- Technical debt from hastily AI-generated features

2026-Specific Solution

Implement AI-assisted scope audits: Use LLMs to analyze your feature list against your core hypothesis, automatically flagging scope creep. Adopt "feature freeze prompts" that force teams to justify each addition against a single success metric before AI generates any code.

2. Poor Discovery Phase

Why It Happens

Pressure to leverage AI speed advantages leads teams to skip or rush user research. "We'll just ship and iterate" becomes the default, bypassing validation of problem-solution fit.

Impact

- Building solutions for assumed problems

- High pivot costs despite fast development

- Wasted AI compute on the wrong features

- User acquisition fails because the product doesn't resonate

2026-Specific Solution

Deploy AI-powered discovery workflows: Use multi-agent systems for rapid user interview analysis, sentiment clustering, and pain point identification. Tools like synthetic user personas (trained on real interview data) can stress-test assumptions in hours, not weeks. Budget 30% of sprint zero for AI-augmented discovery.

3. Wrong Tech Stack

Why It Happens

Teams choose stacks based on AI tool compatibility rather than product needs. They pick technologies that work well with current coding assistants but don't align with long-term scalability or team expertise.

Impact

- Vendor lock-in to AI tooling ecosystems

- Performance issues when scaling beyond MVP

- Team velocity drops when AI can't help with edge cases

- Migration costs multiply if a pivot is needed

2026-Specific Solution

Use stack decision frameworks that prioritize exit strategies: Select technologies that stay maintainable both with and without AI assistance. Favor stacks with strong community support and multiple AI tool integrations (not just one). Run "AI failure drills": can your team maintain and extend the code if the AI tool disappears?

4. Underestimating AI Model Costs

Why It Happens

Development-phase costs are negligible, masking production-scale expenses. Teams don't model costs at 10x, 100x, or 1000x user volume. Free tiers and beta pricing create false baselines.

Impact

- Unit economics break at scale

- Forced architecture rewrites mid-growth

- Emergency cost optimization disrupts the roadmap

- Inability to offer freemium or low-price tiers

2026-Specific Solution

Implement cost-per-user tracking from day one: Build observability that ties every AI API call to user actions and revenue. Use tiered model strategies (GPT-4 for complex tasks, GPT-4o-mini for simple ones). Set hard budget alerts at 3x expected usage. Consider fine-tuned smaller models or edge inference for predictable workflows.
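Cost-per-user tracking, tiered model routing, and a 3x budget alert fit together in a small amount of code. The sketch below is one way to wire them up. The model names (`large-model`, `small-model`) and per-1K-token prices are hypothetical placeholders rather than any vendor's real pricing, and the 10x/100x/1000x projection is deliberately naive (linear), which is still enough to show when unit economics break.

```python
from collections import defaultdict

# Hypothetical per-1K-token prices (USD); replace with your vendor's current rates.
MODEL_PRICES = {"large-model": 0.010, "small-model": 0.0005}


class AICostTracker:
    """Attribute every AI call to a user and flag spend above a multiple of the budget."""

    def __init__(self, expected_monthly_spend: float, alert_multiple: float = 3.0):
        self.alert_threshold = expected_monthly_spend * alert_multiple
        self.cost_by_user: dict[str, float] = defaultdict(float)

    @staticmethod
    def choose_model(task_complexity: str) -> str:
        # Tiered strategy: reserve the expensive model for complex tasks.
        return "large-model" if task_complexity == "complex" else "small-model"

    def record_call(self, user_id: str, model: str, tokens: int) -> float:
        cost = tokens / 1000 * MODEL_PRICES[model]
        self.cost_by_user[user_id] += cost
        return cost

    @property
    def total_spend(self) -> float:
        return sum(self.cost_by_user.values())

    def over_budget(self) -> bool:
        return self.total_spend >= self.alert_threshold

    def cost_per_user(self) -> float:
        return self.total_spend / len(self.cost_by_user) if self.cost_by_user else 0.0


def project_monthly_cost(cost_per_user: float, users: int, multiples=(10, 100, 1000)) -> dict[int, float]:
    """Naive linear projection of AI spend at 10x, 100x, and 1000x the current user count."""
    return {m: cost_per_user * users * m for m in multiples}


if __name__ == "__main__":
    tracker = AICostTracker(expected_monthly_spend=50.0)
    tracker.record_call("u1", AICostTracker.choose_model("complex"), tokens=2000)
    tracker.record_call("u2", AICostTracker.choose_model("simple"), tokens=4000)
    print(f"cost per user: ${tracker.cost_per_user():.4f}, alert: {tracker.over_budget()}")
    print(project_monthly_cost(tracker.cost_per_user(), users=100))
```

Comparing the projected figure against what each user pays tells you early whether a freemium tier is viable.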

5. Lack of Data Strategy

Why It Happens

Focus on shipping features overshadows data collection planning. Teams assume they can "add analytics later" or that AI will automatically extract insights from unstructured data.

Impact

- Can't prove product-market fit with data

- Regulatory compliance gaps discovered late

- Inability to train custom models on user data

- Missing context for AI personalization features

2026-Specific Solution

Adopt data contracts at feature design: Before building any feature, define what data it generates, where it's stored, its retention policies, and how it feeds learning loops. Use AI to auto-generate schema documentation and compliance checks. Implement event-driven architectures that make every user action capturable by default.
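A data contract can start as a small, versioned object that says what an event must contain, where it is stored, and how long it is kept, plus a validator that runs before events are accepted. The sketch below is one lightweight way to express that; the `project_created` event, its fields, and the `warehouse.events` table name are hypothetical examples.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataContract:
    """What a feature's event must contain, where it lives, and how long it is kept."""
    event_name: str
    required_fields: dict[str, type]
    storage: str          # hypothetical destination, e.g. a warehouse table
    retention_days: int
    contains_pii: bool = False

    def validate(self, event: dict) -> list[str]:
        """Return a list of problems; an empty list means the event satisfies the contract."""
        problems = []
        if event.get("event") != self.event_name:
            problems.append(f"expected event '{self.event_name}', got '{event.get('event')}'")
        for field, expected_type in self.required_fields.items():
            if field not in event:
                problems.append(f"missing field '{field}'")
            elif not isinstance(event[field], expected_type):
                problems.append(f"field '{field}' should be {expected_type.__name__}")
        return problems


# Hypothetical contract for a "project_created" event in a project management MVP.
PROJECT_CREATED = DataContract(
    event_name="project_created",
    required_fields={"user_id": str, "template_used": bool, "setup_seconds": int},
    storage="warehouse.events",
    retention_days=365,
)

if __name__ == "__main__":
    good = {"event": "project_created", "user_id": "u1", "template_used": True, "setup_seconds": 840}
    bad = {"event": "project_created", "user_id": "u1", "setup_seconds": "fast"}
    print(PROJECT_CREATED.validate(good))  # []
    print(PROJECT_CREATED.validate(bad))
```

Rejecting or quarantining events that fail validation keeps bad data out of the metrics you will later use to argue for product-market fit.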

6. Hybrid Integrations (AI + Legacy Systems)

Why It Happens

Enterprise customers or regulated industries require connecting modern AI features to decades-old infrastructure. Legacy APIs weren't designed for real-time AI data needs. Authentication, data formats, and latency expectations clash.

Impact

- 60–80% of integration time spent on legacy compatibility

- AI features feel slow or unreliable to users

- Data sync issues create inconsistent experiences

- Security vulnerabilities at integration points

2026-Specific Solution

Build intelligent middleware layers: Use AI-powered API translators that handle schema mismatches and data transformation automatically. Implement async patterns with smart caching: AI predictions run on cached data while background sync handles legacy updates. Adopt "shadow mode" testing, where AI runs in parallel with legacy systems without blocking user flows.
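Shadow mode means users always get the legacy result, while the AI path runs alongside it and disagreements are recorded for review. The sketch below shows the idea with Python's standard `ThreadPoolExecutor`; `legacy`, `ai`, and `on_mismatch` are hypothetical callables you would supply, and a production system would more likely push the AI work onto a queue.

```python
import logging
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Any, Callable

logger = logging.getLogger("shadow_mode")
_shadow_pool = ThreadPoolExecutor(max_workers=4)  # background workers for the AI path


def shadow_call(
    request: Any,
    legacy: Callable[[Any], Any],
    ai: Callable[[Any], Any],
    on_mismatch: Callable[[Any, Any, Any], None],
) -> tuple[Any, Future]:
    """Return the legacy result right away; evaluate the AI path on a background thread."""
    legacy_result = legacy(request)

    def compare() -> None:
        try:
            ai_result = ai(request)
        except Exception:
            # AI failures are logged but never surface to the user.
            logger.exception("AI path failed in shadow mode")
            return
        if ai_result != legacy_result:
            on_mismatch(request, legacy_result, ai_result)

    # Callers can ignore the future in production; tests can wait on it.
    return legacy_result, _shadow_pool.submit(compare)
```

Once the mismatch rate stays acceptably low over enough real traffic, you have evidence for switching specific flows from legacy to AI.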

7. Market Oversaturation

Why It Happens

AI lowers barriers to entry, so thousands of similar MVPs launch simultaneously. Differentiation is low because everyone uses the same foundation models and UI patterns: "ChatGPT wrapper" syndrome.

Impact

- CAC spikes as competition floods channels

- Commoditization pressure before achieving PMF

- Investor fatigue with "yet another AI tool."

- Price compression makes unit economics harder

2026-Specific Solution

Focus on vertical depth over horizontal breadth: Build for specific workflows in defined industries where domain expertise creates moats. Invest in proprietary data loops (your AI gets smarter from user data competitors can't access). Differentiate on outcomes, not features: "reduces hospital readmissions by 30%" beats "AI-powered health assistant."

8. Compliance (Healthcare/Fintech)

Why It Happens

AI introduces new regulatory complexity: model explainability requirements, data residency for training data, and liability for AI decisions. Rules such as the EU AI Act, HIPAA as applied to AI systems, and financial-services AI auditing requirements are still evolving.

Impact

- 6–12 month delays for compliance certification

- Architecture changes required for audit trails

- Restricted model choices (can't use black-box LLMs)

- Geographic launch restrictions

2026-Specific Solution

Implement compliance-by-design with AI auditing: Use specialized LLMs trained on regulatory frameworks to review architecture decisions in real time. Build explainability layers from day one (LIME/SHAP for predictions). Choose AI vendors with SOC 2, HIPAA BAAs, and regional data hosting. Consider hybrid approaches: rule-based systems for regulated decisions, AI for recommendations only. Partner with legal-tech AI platforms that provide pre-built compliance templates for common use cases.

MVP Opportunities in 2026: Why This Year Is Exceptional

2026 represents a unique inflection point where technological capability, market readiness, and economic pressure converge. For the first time, founders can build enterprise-grade products at consumer-app speed, while investors demand proof over promises.

1. AI Democratization: The Great Equalizer

The cost of AI capabilities has dropped 94% since 2020 (Stanford AI Index 2024). What required $500K+ engineering teams in 2023 now costs $5K in API credits. More critically, model performance has plateaued—GPT-4, Claude Sonnet, and Gemini are functionally equivalent for most use cases, eliminating "AI advantage" as a moat. The battleground has shifted to application-layer innovation.

Key Stats:

- OpenAI's API pricing: 97% cheaper per token than GPT-3 launch (2020 vs. 2025)

- Developer velocity: GitHub reports developers using AI assistants ship features 55% faster with 40% fewer bugs

- No-code AI platforms: Projected 230M users by the end of 2025 (Gartner), vs. 28M software developers globally

- Fine-tuning costs: Down from $100K+ to $500-3K for domain-specific models

What This Means for MVPs:

A solo founder with $10K can now build what previously required venture-backed teams. The constraint is no longer "can we build this?" but "should we build this?" This shifts competitive advantage to domain expertise, distribution, and speed of learning—all areas where scrappy MVP builders excel over incumbents.

2. Investor Demand for Validated Products

The 2021-2022 era of "fund the vision" is dead. Post-2023 correction, median seed rounds dropped 32% in size while investor expectations for traction increased. In 2026, pre-product raises are nearly extinct outside top-tier founder repeat plays.

Key Stats:

- Bessemer's 2025 State of the Cloud report shows 78% of B2B seed deals now require $10K+ MRR or 1,000+ engaged users.

- Average time from founding to seed round increased from 8 months (2021) to 19 months (2025).

- YC acceptance rate dropped to 1.5% in 2025, with accepted companies showing 30% higher pre-application traction than 2022 cohorts.

What This Creates:

A validation economy—founders who can de-risk with lean MVPs command better terms and higher valuations. Investors will pay premium multiples for proven product-market fit over speculative potential. This rewards builders who master rapid experimentation.

The Opportunity:

Companies that bootstrap to $50-100K ARR before raising can command 2-3x higher valuations than those raising at the idea stage. MVPs aren't just product development; they're valuation engineering.

3. Industry-Specific Opportunities

Healthcare: Post-Pandemic Digital Acceleration Meets AI

Why Now:

- Telehealth adoption stabilized at 38x pre-pandemic levels—the behavior change is permanent.

- CMS approved reimbursement codes for 14 new digital health categories in 2025, making AI-driven diagnostics billable

- Administrative burden crisis: Physicians spend 49% of work hours on EHR/admin tasks, creating a massive efficiency opportunity

Market Size:

Global digital health market projected at $656B by 2026, growing at a 27.7% CAGR

MVP Sweet Spots:

- Clinical workflow automation: AI scribes for documentation (Suki's integration with Epic shows 70% time savings)

- Prior authorization automation: $31B annual waste in admin time

- Remote patient monitoring: Medicare now covers RPM for 200+ conditions

- Mental health triage: 160M Americans in therapy deserts.

Why MVPs Win Here:

Incumbents (Epic, Cerner) move slowly due to compliance overhead. Focused MVPs solving one workflow problem can capture hospital departments before enterprise sales cycles even start.

Fintech: Regulatory Clarity + Embedded Finance

Why Now:

- EU's MiCA regulation (2024) and the UK's digital securities framework provide clarity for crypto/digital assets.

- The embedded finance market hit $138B in 2025, projected $248B by 2028—every SaaS wants payment/lending features.

- Open banking mandates: 72% of US banks now offer API access vs. 23% in 2021

Market Dynamics:

- Traditional banks spend 70% of their IT budgets on legacy maintenance, creating an innovation vacuum

- Unit economics shift: Payment processing margins compressed, but data monetization opportunities exploded

MVP Sweet Spots:

- Vertical-specific neobanks: Construction contractors, healthcare providers, franchisees

- Treasury management for SMBs: $2.3T sitting in non-interest accounts

- Crypto compliance infrastructure: Every exchange needs AML/KYC automation

- B2B payment orchestration: Managing 12+ payment rails is now table stakes

Real Example:

Column (banking infrastructure) reached $40M ARR in 18 months by focusing exclusively on embedded banking for vertical SaaS. Their MVP was live in 6 weeks.

Logistics: Post-Supply-Chain-Crisis Digitization

Why Now:

- 95% of supply chain leaders invested in digital tracking post-2021 disruptions, but tools remain fragmented

- Nearshoring boom: Mexico-US trade up 23% YoY, creating new routing complexity

- Driver shortage: 78,000 unfilled trucking positions in the US alone—efficiency tools critical

Market Size:

Global logistics tech market: $70B in 2026, growing at a 17% CAGR through 2030

MVP Sweet Spots:

- Last-mile optimization: AI route planning (the Onfleet model, now $100M+ ARR)

- Freight matching for specialized loads: Temperature-controlled, hazmat, oversize

- Warehouse labor management: Temporary staffing coordination (think Uber for forklift operators)

- Returns logistics: $816B in returned merchandise annually

Why MVPs Win:

Logistics operators will pay for ROI-positive tools immediately. If your MVP saves 10% on fuel costs, you can charge deal-by-deal before building a full platform.

Proptech: Commercial Real Estate Rebalancing

Why Now:

- Office vacancy rates stabilized at 19.2% nationally—"return to office" stalled, forcing asset repositioning

- $1.5T in commercial mortgages maturing 2024-2026 amid a refinancing crisis

- Residential: Build-to-rent market growing 23% annually as homeownership stalls

Market Dynamics:

- Distressed assets: Banks holding foreclosed commercial properties need disposition tools

- Adaptive reuse: Converting offices to residential—requires new design/permitting workflows

MVP Sweet Spots:

- Tenant experience platforms: Building apps for amenity booking, maintenance (HqO model)

- Energy efficiency retrofitting: $379B IRA funding for building upgrades

- Fractional ownership platforms: Commercial property syndication for accredited investors

- Construction project management: 85% of projects over budget—coordination tools desperately needed

Validation Insight:

Proptech has the highest MVP-to-scale success rate (42% reach Series A) because customers will pilot quickly if ROI is proven in one building.

EdTech: AI Tutoring Meets Credential Inflation

Why Now:

- 68% of jobs now require college degrees vs. 45% in 2000, but degree skepticism is at an all-time high

- Competency-based hiring: Google, Apple, and IBM dropped degree requirements for 50%+ of roles

- Learning crisis: 40% of US 4th graders are below basic math proficiency post-pandemic

Market Size:

Global EdTech market: $342B in 2025, $605B by 2028

MVP Sweet Spots:

- AI tutoring with verified outcomes: Khan Academy's Khanmigo shows 34% improvement in test scores

- Micro-credentialing platforms: Skill certification in 2-6 weeks (bootcamp model but broader)

- Corporate upskilling: $380B annual spend, mostly wasted on ineffective training

- Special education tools: 7.5M US students with IEPs, mostly underserved

Why 2026 Specifically:

Parents who experienced pandemic learning loss are willing to pay for proven interventions. B2B buyers want measurable skill gains, not engagement metrics, which makes this market a natural fit for lean MVPs with tight feedback loops.

Manufacturing: Reshoring + Labor Shortage

Why Now:

- CHIPS Act deployed $52B for domestic semiconductor production—70+ new fabs breaking ground

- The manufacturing sector has 622,000 unfilled positions despite rising wages

- Sustainability pressure: EU Carbon Border Adjustment Mechanism (2026) taxes high-carbon imports

Market Dynamics: Factories are data-rich but insight-poor. Legacy MES/ERP systems don't expose data for AI analysis.

MVP Sweet Spots:

- Predictive maintenance: Computer vision for equipment monitoring (industrial IoT)

- Digital work instructions: AR/tablets replacing paper manuals (younger workforce expectation)

- Supply chain visibility: Track components across tier-2/3 suppliers

- Quality inspection automation: AI vision systems (99.7% accuracy now standard)

Real Example:

Tulip (manufacturing app platform) reached a $100M valuation by solving one problem: digitizing paper-based assembly instructions. It started with a 2-week MVP in one factory.

AI Automation Tools: Picks and Shovels

Why Now: Every company is trying to implement AI, but 87% of AI projects fail to reach production (Gartner). The gap between "AI demo" and "AI in production" is massive—an infrastructure opportunity.

Market Size:

MLOps and AI infrastructure market: $18B in 2025, $126B by 2030

MVP Sweet Spots:

- Prompt management platforms: Version control for LLM interactions (Humanloop model)

- AI observability: Track model costs, latency, drift (the Arize and Weights & Biases model)

- Evaluation frameworks: Automated testing for AI outputs

- Multi-agent orchestration: Tools to coordinate specialized AI agents
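To make the AI-observability idea concrete, here is a minimal Python sketch of per-call cost and latency tracking. The model name and per-1K-token prices are hypothetical placeholders, not any provider's real rates, and a production tool would also persist calls and watch for drift.

```python
from dataclasses import dataclass, field
from statistics import quantiles


@dataclass
class LLMCall:
    model: str
    prompt_tokens: int
    completion_tokens: int
    latency_ms: float


@dataclass
class CostTracker:
    # Hypothetical USD prices per 1K tokens: model -> (input price, output price).
    prices_per_1k: dict
    calls: list = field(default_factory=list)

    def cost(self, call: LLMCall) -> float:
        """Estimated USD cost of one call from its token counts."""
        in_price, out_price = self.prices_per_1k[call.model]
        return (call.prompt_tokens * in_price + call.completion_tokens * out_price) / 1000

    def record(self, call: LLMCall) -> float:
        """Store the call and return its estimated cost."""
        self.calls.append(call)
        return self.cost(call)

    def total_cost(self) -> float:
        return sum(self.cost(c) for c in self.calls)

    def p95_latency_ms(self) -> float:
        """95th-percentile latency; the 'inclusive' method stays within the observed range."""
        latencies = [c.latency_ms for c in self.calls]
        if len(latencies) < 2:
            return latencies[0] if latencies else 0.0
        # n=20 yields 19 cut points; the last one is the 95th percentile.
        return quantiles(latencies, n=20, method="inclusive")[-1]


tracker = CostTracker(prices_per_1k={"example-model": (0.001, 0.002)})
for ms in (100, 200, 300, 400, 500):
    tracker.record(LLMCall("example-model", prompt_tokens=1000, completion_tokens=500, latency_ms=ms))
print(f"total=${tracker.total_cost():.4f}, p95={tracker.p95_latency_ms():.0f} ms")
```

Tracking cost per call, rather than only the monthly invoice, is what lets a tool like this attribute savings (for example from caching or a cheaper model) to specific features.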

Why This Category Is Special:

You're selling to teams already spending on AI, so this is expansion revenue, not new budgets. If you can prove 20% cost savings on existing OpenAI spend, you close deals in weeks.

4. Macro Trends Amplifying All Opportunities

1. The "API Economy" Maturity

77% of enterprises now offer public APIs vs. 32% in 2019. Every service is composable—your MVP can integrate best-in-class components instead of building from scratch.

2. Zero-Interest Rate Era Is Over

Efficiency matters again. The median burn multiple (net cash burned per dollar of net new ARR) dropped from 2.3x (2021) to 0.8x (2025). Lean MVPs that prove unit economics early have a competitive advantage.
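The burn multiple is a single division, so a tiny helper makes the comparison concrete. The dollar figures below are hypothetical, chosen only to reproduce the 2.3x and 0.8x ratios quoted above.

```python
def burn_multiple(net_burn: float, net_new_arr: float) -> float:
    """Net cash burned in a period divided by net new ARR added in that period.

    Lower is better: below 1.0x, each dollar burned adds more than a dollar of ARR.
    """
    if net_new_arr <= 0:
        raise ValueError("burn multiple is undefined when net new ARR is zero or negative")
    return net_burn / net_new_arr


# Hypothetical figures: burning $2.3M to add $1M of ARR vs. burning $0.8M for the same $1M.
print(burn_multiple(2_300_000, 1_000_000), burn_multiple(800_000, 1_000_000))
```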

3. Regulatory Tailwinds

- Data portability: GDPR, CCPA force incumbents to expose data via APIs

- Right to repair: Opens access to previously closed industrial systems

- Open banking: Mandated financial data sharing

4. Generational Workforce Shift

Gen Z (27% of workforce by 2025) expects AI-powered tools—they won't tolerate legacy software. This creates "bring your own tool" pressure that MVPs can exploit.

5. Expert Predictions for 2026

Marc Andreessen (a16z):

"The advantage now goes to founders who can validate product-market fit in 90 days, not 9 months. AI didn't change what customers want; it changed how fast you can test your hypotheses."

Sarah Guo (Conviction VC):

"We're investing in fewer companies at higher checks, but only ones that have proven they can acquire customers profitably. The MVP isn't a prototype anymore; it's your first repeatable sales motion."

Tomasz Tunguz (Theory Ventures):

"The cost to validate an idea dropped 95%. The cost of being wrong stayed the same. That asymmetry is the opportunity: you can test 10x more ideas with the same capital."

Real-World MVP Examples: From Concept to Validation

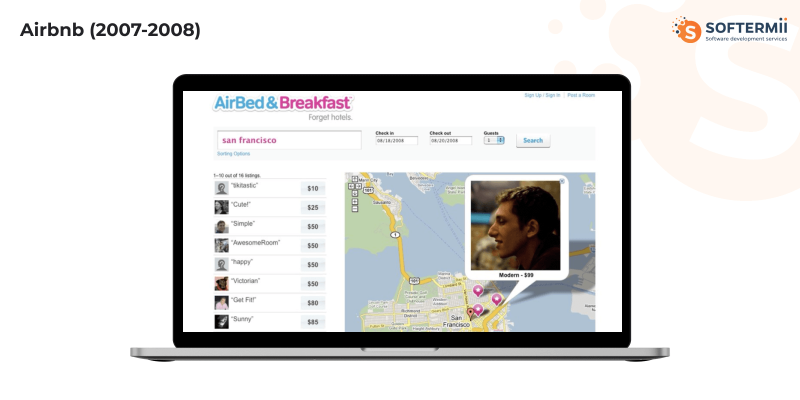

1. Airbnb (2007-2008)

Initial Idea

Help people rent out air mattresses in their apartments to attendees of sold-out conferences. Original name: "AirBed & Breakfast."

MVP Version

- Simple website with photos of their own San Francisco apartment

- Three air mattresses on the floor

- Homemade breakfast included

- Built in 2 weeks using basic web templates

- Launched for the October 2007 Industrial Designers Society of America (IDSA) conference in SF

Cost/Time:

- Development cost: ~$1,000 (domain, basic hosting, template)

- Time to launch: 2 weeks

- First revenue: $240 from 3 guests in one weekend

Metrics Validated:

- ✓ People will pay to stay in strangers' homes (social proof barrier broken)

- ✓ Photos and personal descriptions drive bookings

- ✓ Conference attendees have acute lodging needs

- ✗ Air mattresses weren't the draw—location and price were

Key Lesson:

"Live your MVP." The founders hosted guests themselves, experiencing every pain point firsthand. This led to insights no user interview would reveal, such as how critical professional photography was (they later went door-to-door taking photos for hosts, which increased bookings two- to threefold).

Pivot Moment:

After initial traction, they discovered hosts wanted to rent entire apartments, not just air mattresses. They rebuilt around this insight, but only after validating payment behavior with the minimal version.

2. Dropbox (2007-2008)

Initial Idea

File synchronization service that "just works" across devices without USB drives or emailing files to yourself.

MVP Version

- No actual product built initially—just a 3-minute explainer video

- Demo showed a fake interface syncing files across computers

- Posted to Hacker News and Digg

- Linked to the beta waitlist signup page

Cost/Time:

- Development cost: $0 for MVP (video only), ~$5K for working beta

- Time to video: 1 week to script/record

- Waitlist result: Grew from 5,000 to 75,000 signups overnight

Metrics Validated:

- ✓ Massive demand for seamless file sync (waitlist explosion)

- ✓ The technical audience understood the value proposition immediately

- ✓ Willingness to provide email for future access (early signal of intent)

- ✓ Video explanation was sufficient to communicate a complex technical product.

Key Lesson:

"Sell the problem, not the solution." Drew Houston validated demand before writing sync algorithms. The video showed the outcome (files magically syncing), not the technology. This pre-validation saved months of building features nobody wanted.

Technical Insight:

Early beta focused solely on making sync "invisible": no folders to manage, no manual uploads. This single-minded focus on the core value prop (vs. competing on storage space or features) became their sustainable moat.

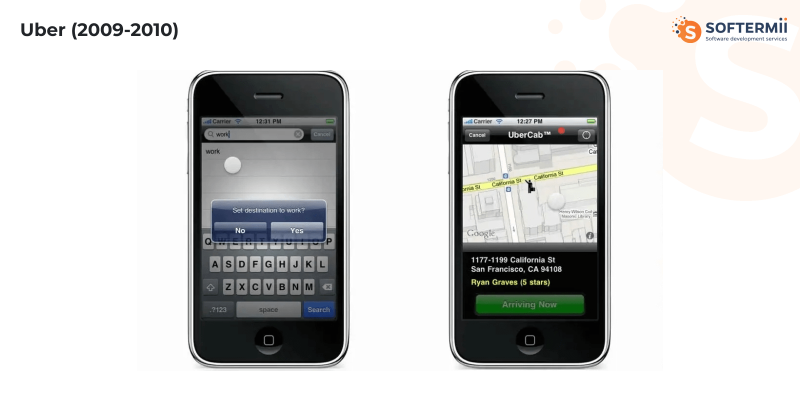

3. Uber (2009-2010)

Initial Idea

On-demand black car service you could request via SMS, initially called "UberCab." It solved the founders' own problem: they couldn't get a taxi in San Francisco.

MVP Version

- iPhone app (iOS only) for three co-founders and ~10 friends

- Manual dispatch: Requests went to Garrett Camp's phone, and he called drivers

- Started with luxury black cars only (simpler than taxi regulations)

- Launched exclusively in San Francisco

- Payment via pre-loaded accounts (no in-app transactions yet)

Cost/Time:

- Development cost: ~$25,000 (basic iOS app + backend)

- Time to launch: 8 months from idea to live MVP

- Initial fleet: 3 drivers with leased black cars

- First month: ~100 rides total

Metrics Validated:

- ✓ People will pre-pay for convenience (account model worked)

- ✓ 5-10 minute wait times acceptable for premium service

- ✓ Drivers preferred guaranteed rides over street hailing

- ✓ GPS tracking reduced "where's my car?" anxiety

- ✗ Black cars only limited addressable market (led to UberX)

Key Lesson:

"Start with a constrained market." By launching black cars in SF only, they avoided complex taxi regulations and focused on experience. The luxury positioning gave them margin to iterate before competing on price. Only after proving unit economics did they expand to UberX and new cities.

Growth Hack:

Founders rode with every driver for the first 6 months, gathering feedback in real time. This "do things that don't scale" approach built driver loyalty and caught UX issues immediately.

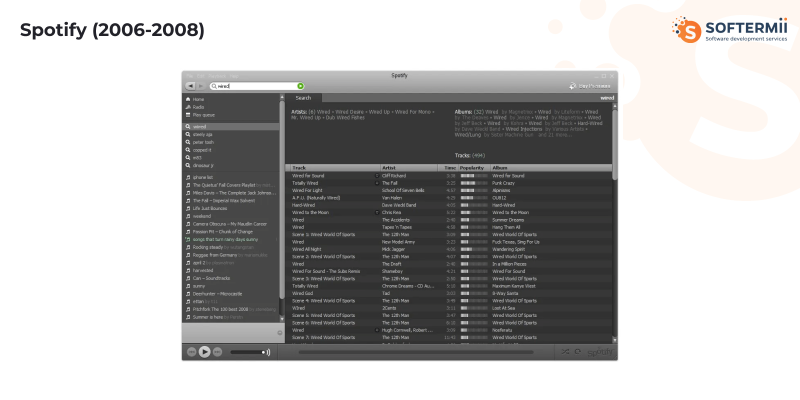

4. Spotify (2006-2008)

Initial Idea

Legal music streaming with zero latency: play any song instantly, with no downloads or buffering. Combat music piracy by being better than free.

MVP Version

- Desktop app (Windows only) for internal testing

- 1,000-song catalog from indie labels willing to experiment

- Peer-to-peer streaming architecture (Daniel Ek had briefly been CEO of µTorrent, which BitTorrent Inc. acquired)

- Invite-only closed beta in Sweden

- Ad-supported free tier (revolutionary for legal music)

Cost/Time:

- Development cost: ~$1M (needed label deals + complex streaming tech)

- Time to beta: 18 months (mostly licensing negotiations)

- Beta users: 10,000 hand-picked Swedish users

- Key metric: 95% of songs played within 200ms (beat piracy experience)

Metrics Validated:

- ✓ Instant playback changed user behavior (no more downloads)

- ✓ Free tier converted pirates to legal listeners (70% stayed on free)

- ✓ Freemium model drove premium conversions (paid users grew 8% monthly)

- ✓ Social sharing features increased engagement 3x

- ✗ Mobile streaming required a complete rebuild (bandwidth challenges)

Key Lesson:

"Compete with free by being better, not cheaper." Spotify's MVP wasn't about catalog size (Pirate Bay had more songs); it was about experience. By making legal streaming faster than piracy, they changed behavior. The MVP proved technical feasibility before spending billions on licensing.

Technical Breakthrough:

P2P caching meant popular songs were streamed from nearby users, not central servers. This made instant playback possible at scale: the core value prop that justified the 18-month build time.

5. Instagram (2010)

Initial Idea

Photo-sharing app with filters to make smartphone photos look professional. Originally part of a check-in app called "Burbn."

MVP Version

- Stripped-down Burbn—removed all features except photo sharing

- 11 filters (built using open-source image processing)

- Square photo format (technical constraint became design feature)

- iOS only, no Android

- Built in 8 weeks by a 2-person team

Cost/Time:

- Development cost: ~$10,000 (hosting + founder time)

- Time to launch: 8 weeks from pivot decision

- Day 1 result: 25,000 signups, server crashed in 2 hours

- Week 1: 100,000 users

Metrics Validated:

- ✓ Filters drove sharing (85% of photos used filters)

- ✓ Mobile-first worked (no desktop version needed)

- ✓ Users wanted simple sharing, not complex social features

- ✓ Square format reduced decision fatigue (constraints = creativity)

- ✗ Video sharing wasn't essential initially (added about 3 years later)

Key Lesson:

"Subtract to find your core." Instagram's MVP was Burbn minus everything that wasn't photo sharing. Kevin Systrom and Mike Krieger analyzed which Burbn features got used most, then deleted everything else. The result: an app so focused it explained itself.

Constraint as Feature:

Square photos weren't an artistic choice; they simplified server storage and made layouts predictable. Users thought it was intentional design. This "happy accident" principle shows how MVPs can turn technical limitations into brand identity.

6. Jasper AI (2021-2024)

Initial Idea

AI copywriting assistant for marketers who need to produce blog posts, ads, and social content at scale.

MVP Version (2021):

- Wrapper around GPT-3 API with marketing-specific prompts

- 5 templates: blog post intros, product descriptions, ad copy, social posts, emails

- No custom models—just clever prompt engineering

- Sold via the founder's existing marketing audience

Cost/Time:

- Development cost: ~$15,000 (frontend + GPT-3 credits)

- Time to launch: 6 weeks

- Month 1: $30K MRR from 200 customers

- 12 months: $40M ARR

Metrics Validated:

- ✓ Marketers will pay $40-100/month for AI writing tools

- ✓ Template-based approach beats open-ended text box

- ✓ Output editing (not generation) is where users spend time

- ✓ Content quality matters less than content velocity for the target segment

Key Lesson:

"Find underserved API users." Jasper didn't build models; they built distribution and UX for GPT-3. Their MVP validated that marketers needed a "no-code interface for AI" more than they needed better AI. This created an 18-month head start before OpenAI released ChatGPT.

2024 Evolution:

After ChatGPT commoditized basic generation, Jasper pivoted to "brand voice" training and enterprise workflow integrations, the features their MVP usage data revealed were most valued.

7. Notion AI (2022-2024)

Initial Idea

Add AI writing and editing directly inside the Notion workspace, with no context switching to ChatGPT.

MVP Version (Late 2022):

- Single feature: "AI writing assistant" button in every Notion block

- 4 functions: continue writing, summarize, improve writing, translate

- Launched to existing Notion users only (30M+ accounts)

- Billed as $10/month add-on to existing subscriptions

Cost/Time:

- Development cost: ~$500K (integration into existing platform)

- Time to alpha: 4 months with a 20-person team

- Alpha testers: 10,000 existing power users

- Week 1 conversion: 12% of invited users subscribed

Metrics Validated:

- ✓ Context-aware AI (inside workspace) is worth premium vs. standalone tools

- ✓ Editing existing content > generating new content (60% of usage)

- ✓ "Continue writing" most-used feature (45% of AI interactions)

- ✗ Translation barely used—removed 6 months later

Key Lesson:

"Embed AI where users already work." Notion's MVP proved that switching costs matter: users paid $10/month to avoid copy-pasting into ChatGPT. The embedded experience, not AI capability, was the moat. This validated the "AI feature, not AI product" thesis.

Product Insight:

Notion tracked which blocks users AI-edited vs. AI-generated from scratch. Result: 70% were edits to existing content. This showed AI works best as an "enhancement" tool for knowledge workers, not a replacement.

8. Perplexity AI (2022-2024)

Initial Idea

"Answer engine" that cites sources—AI search without Google's ad clutter or ChatGPT's hallucinations.

MVP Version (Late 2022):

- Simple text input → AI-generated answer with citations

- Used GPT-3.5 + web search API (Bing/Brave)

- No accounts, no login wall—just instant answers

- Mobile app launched the same week as web

Cost/Time:

- Development cost: ~$50,000 (LLM API + search API + hosting)

- Time to launch: 10 weeks

- Week 1: 50,000 queries from Product Hunt launch

- 6 months: 10M queries/month

Metrics Validated:

- ✓ Users preferred cited answers over raw ChatGPT responses (trust factor)

- ✓ "Research mode" (deeper dives) drove 40% of premium upgrades

- ✓ Mobile-first search behavior emerging (65% mobile traffic)

- ✓ Follow-up questions (conversation) kept users engaged 3x longer

Key Lesson:

"Solve AI's credibility problem." Perplexity's MVP wasn't better AI; it was verifiable AI. Citations turned AI from entertainment into a work tool. This single UX choice differentiated them from ChatGPT and validated a $520M valuation by 2024.

Growth Mechanic:

No login was required for the first 5 searches, creating a massive top of funnel. Conversion to paid happened when users hit the limit during a research session (a high-intent moment). The MVP validated this "freemium on usage, not time" model.
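The "freemium on usage" mechanic reduces to a counter per anonymous visitor. The Python sketch below is hypothetical (it does not describe how Perplexity actually implements its limit): visitor IDs are placeholders, and in production the counter would live in a shared store rather than in process memory.

```python
from collections import defaultdict

FREE_SEARCHES = 5  # the "first 5 searches" limit described above


class UsageGate:
    """Hypothetical usage-based freemium gate keyed by an anonymous visitor ID."""

    def __init__(self, free_limit: int = FREE_SEARCHES):
        self.free_limit = free_limit
        self.used = defaultdict(int)  # visitor_id -> searches consumed

    def check(self, visitor_id: str, is_paid: bool = False) -> str:
        if is_paid:
            return "allow"
        if self.used[visitor_id] < self.free_limit:
            self.used[visitor_id] += 1
            return "allow"
        # Limit hit mid-session: the high-intent moment to show the upgrade prompt.
        return "prompt_upgrade"


gate = UsageGate()
decisions = [gate.check("visitor-123") for _ in range(6)]
```

Metering on searches rather than days means the upgrade prompt appears while the user is actively researching, which is the point of the model.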

9. Hebbia AI (2023-2025)

Initial Idea

AI-powered document analysis for financial analysts, lawyers, and consultants who read hundreds of PDFs daily.

MVP Version (Mid-2023):

- Upload PDFs → Ask questions → Get answers with exact page citations

- Custom RAG (retrieval-augmented generation) on user documents

- Started with 10-K filings (public financial documents) only

- Sold exclusively to investment analysts
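The retrieve-then-cite flow behind "answers with exact page citations" can be sketched in a few lines. This is a simplified stand-in, not Hebbia's system: it ranks page-level passages by keyword overlap instead of embedding similarity, and it omits the LLM step that would write the answer from the retrieved passages. Document names and page numbers are made up.

```python
import re
from dataclasses import dataclass


@dataclass
class Passage:
    doc: str
    page: int
    text: str


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, passages: list[Passage], k: int = 2) -> list[Passage]:
    """Rank passages by term overlap with the question (a stand-in for embedding similarity)."""
    q = tokenize(question)
    scored = [(len(q & tokenize(p.text)), p) for p in passages]
    scored = [item for item in scored if item[0] > 0]  # drop passages with no overlap
    scored.sort(key=lambda item: item[0], reverse=True)  # stable: ties keep document order
    return [p for _, p in scored[:k]]


def cite(passages: list[Passage]) -> list[str]:
    """Page-level citations that an answer would carry alongside its text."""
    return [f"{p.doc}, p. {p.page}" for p in passages]


passages = [
    Passage("Example Corp 10-K", 12, "Revenue grew 14% driven by cloud services"),
    Passage("Example Corp 10-K", 40, "Risk factors include supply chain disruption"),
    Passage("Example Corp 10-K", 55, "Cloud services revenue by segment"),
]
hits = retrieve("How did cloud revenue grow?", passages)
print(cite(hits))
```

Keeping the page number attached to every retrieved passage is what makes each sentence of the final answer auditable.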

Cost/Time:

- Development cost: ~$200,000 (custom RAG infrastructure + GPT-4)

- Time to alpha: 5 months

- First customer: Large hedge fund, $50K/year contract

- 12 months: $10M ARR with 50 enterprise customers

Metrics Validated:

- ✓ Analysts will pay $1K+/seat for vertical-specific AI

- ✓ Accuracy > speed for professional use cases (they chose accuracy every time)

- ✓ Multi-document reasoning (compare 10 PDFs) is a killer feature

- ✓ Private deployment option critical for financial customers

Key Lesson:

"Go vertical, not horizontal." Hebbia's MVP focused on one use case (financial document analysis) rather than generic "AI for documents." This allowed deep customization: understanding SEC filing structures, financial terminology, and analyst workflows. Result: 10x higher pricing than horizontal competitors.

Enterprise Insight:

MVP customers paid $50K not for better AI, but for auditability: every answer was traceable to its source paragraph. This "AI accountability" feature became their entire sales pitch.

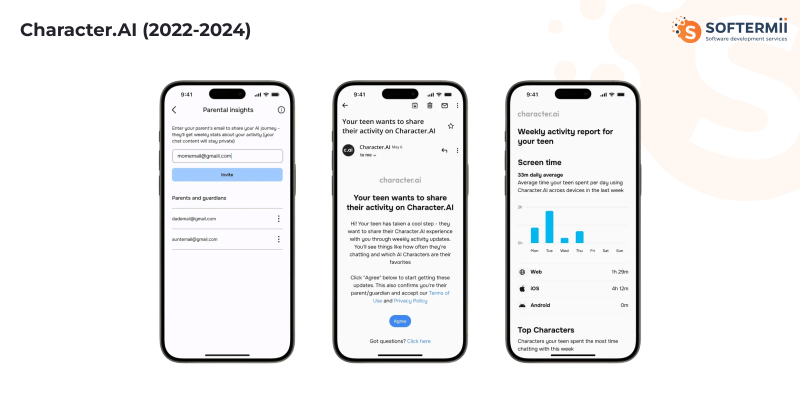

10. Character.AI (2022-2024)

Initial Idea

Talk to AI versions of historical figures or fictional characters, or create custom AI personas.

MVP Version (Late 2022):

- 20 pre-built characters (Einstein, Shakespeare, Elon Musk, etc.)

- Simple chat interface—no bells and whistles

- Powered by a custom LLM trained on character-specific data

- Completely free, no account required initially

Cost/Time:

- Development cost: ~$2M (ex-Google Brain founders, custom model training)

- Time to launch: 6 months from founding

- Month 1: 1M users organically (no marketing)

- 6 months: 100M messages sent

Metrics Validated:

- ✓ Entertainment > utility drives consumer AI adoption (80% of usage was casual chat)

- ✓ User-created characters more popular than official ones (UGC model)

- ✓ Session length 25+ minutes (vs. <5 min for ChatGPT at the time)

- ✓ Teens/Gen Z primary audience—emotional connection to AI

Key Lesson:

"AI doesn't have to be productive." Character.AI proved consumers want AI for fun, not just work. Their MVP validated emotional connection as a moat: users formed relationships with characters, creating retention ChatGPT couldn't match. This consumer behavior insight drove a $1B valuation.

Controversial Insight:

Users spent more time chatting with AI personas than searching or writing. This suggested AI's biggest consumer opportunity might be companionship, not productivity, a thesis still playing out in 2026.

11. v0.dev by Vercel (2023-2024)

Initial Idea

Generate production-ready React components from text descriptions: AI for frontend development.

MVP Version (Mid-2023):

- Text prompt → generates React + Tailwind CSS code

- Live preview of the component

- One-click copy to clipboard or deploy to Vercel

- Uses GPT-4 fine-tuned on React documentation + GitHub code

Cost/Time:

- Development cost: ~$300K (Vercel internal project)

- Time to alpha: 4 months

- Week 1 (invite-only): 50,000 components generated

- 3 months: Used in 1M+ Vercel deployments

Metrics Validated:

- ✓ Developers will use AI for UI scaffolding (70% of generated code kept as-is)

- ✓ Iteration speed matters—users regenerated 4-5 times to refine

- ✓ "Show me variants" feature drove 50% of usage (designers exploring options)

- ✗ Backend code generation is not reliable enough (remained frontend-only)

Key Lesson:

"AI for the boring parts." v0's MVP validated that developers want AI for repetitive UI work, not architecture decisions. This insight informed the entire product roadmap: focus on boilerplate, not business logic. Result: v0 was embedded into Vercel's platform, driving core product adoption.

Technical Validation:

Code quality metric: 68% of generated components passed linting without edits. This "production-ready threshold" proved AI could ship real code, not just prototypes.

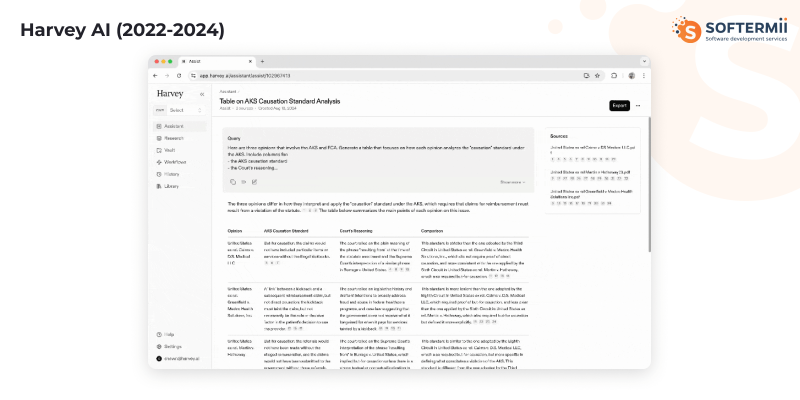

12. Harvey AI (2022-2024)

Initial Idea

Legal AI assistant trained on case law, contracts, and legal workflows for law firms.

MVP Version (Early 2023):

- Contract analysis: Upload contract → AI highlights risks and non-standard clauses

- Started with M&A contracts only (narrow vertical)

- Sold to a single law firm (Allen & Overy) as a pilot

- Custom model trained on the firm's historical contracts

Cost/Time:

- Development cost: ~$500K (legal data licensing + model training)

- Time to pilot: 8 months (legal partnership + compliance review)

- Pilot result: 40% time savings on contract review

- Within 12 months: $80M Series B (December 2023) based on pilot success

Metrics Validated:

- ✓ Lawyers will use AI if accuracy exceeds 95% (trust threshold)

- ✓ Time savings > cost savings in law firm ROI calculations

- ✓ Junior associate work (contract review) is the automation sweet spot

- ✓ Partner-level attorneys want AI to suggest, not decide

Key Lesson:

"Regulated industries pay for certainty." Harvey's MVP focused on provable accuracy metrics before feature breadth. They validated every output with law firm partners, accepting slower iteration for higher quality. This built a level of trust that generic LLMs could not achieve.

Sales Insight:

First customer paid $1M+ for the pilot because Harvey's founders (a former DeepMind research scientist and a former litigator) understood legal workflows. Domain expertise, not just AI capability, closed the deal.

Common Patterns Across All MVPs

| Category | Key Idea | Description / Examples |
|---|---|---|
| Classic MVPs (2007–2010) | Solve the founder's personal problem | Airbnb, Uber, and Instagram started as solutions to the founders’ own problems |
| | Launch to a constrained audience | Single city, one platform, very small early user groups |
| | Do things that don't scale | Manual work, founder involvement in operations |
| | One core feature obsessively polished | Instagram filters, Spotify’s speed and smoothness |
| AI-Native MVPs (2022–2026) | Leverage existing models | Wrap GPT instead of building custom LLMs from zero |
| | Validate distribution before technology | Jasper, Notion AI focused on the audience and channels first |
| | Cite sources / show work | Perplexity, Hebbia emphasize transparency |
| | Go vertical immediately | Harvey, Hebbia specialize deeply vs. horizontal ChatGPT |
| Universal Lessons | Speed to validation > completeness | MVPs launch simple, imperfect versions early |
| | One metric matters | Dropbox (waitlist), Instagram (Day 1 signups), Jasper (MRR) |
| | Constraints breed creativity | Instagram square photos, Uber black-car-only start |
| | Users tell you what to build next | Every case iterated based on real user behavior, not roadmaps |

The throughline: MVPs succeed by proving one critical assumption cheaply and quickly, then using that proof to guide what's built next.

MVP Development by Industry

MVP development varies significantly across industries because each domain has its own regulatory constraints, technical dependencies, user expectations, and validation metrics. While the overall MVP philosophy remains consistent — build the smallest version that delivers real value — the implementation looks very different for healthcare, fintech, marketplaces, SaaS, and AI-native products.

Healthcare MVPs

Healthcare MVP development requires a strict focus on compliance, secure data flows, and clinical reliability from day one. Unlike general SaaS, healthcare products face regulatory and integration constraints that affect architecture, timelines, and scope.

HIPAA/GDPR compliance

Healthcare MVPs must include privacy-by-design principles: encrypted data storage, role-based access control, audit logs, and minimal PHI handling. Early compliance workshops and documented data flows are essential to avoid costly rework later.
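As an illustration of two of those controls, role-based access and audit logging, the Python sketch below checks a hypothetical role map and records every access attempt, granted or denied. It is not a compliance implementation: real systems keep audit trails in append-only, access-controlled storage, and these roles are placeholders.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission map; derive real roles from your clinical workflows.
ROLE_PERMISSIONS = {
    "clinician": {"read_phi", "write_notes"},
    "billing": {"read_billing"},
    "support": set(),
}

audit_log = []  # stand-in for append-only, access-controlled storage


def access(user_id: str, role: str, action: str, patient_id: str) -> bool:
    """Check the role's permissions and log the attempt, whether granted or denied."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())  # unknown roles get nothing
    audit_log.append({
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user_id,
        "role": role,
        "action": action,
        "patient_ref": patient_id,  # an identifier only, never clinical content
        "allowed": allowed,
    })
    return allowed
```

Logging denials as well as grants matters: failed attempts are often the first signal of misuse.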

EHR integration

Electronic Health Record (EHR) integrations (Epic, Cerner, FHIR APIs) can significantly impact MVP timelines. Start with a limited “read-only” integration or a sandbox environment to validate core workflows without full clinical connectivity.
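A read-only first integration often starts with the FHIR RESTful "read" interaction, `GET [base]/Patient/[id]`. The sketch below only builds that request with Python's standard library; the sandbox URL and token are placeholders, and obtaining a real token (typically via SMART on FHIR OAuth 2.0) is out of scope here.

```python
import urllib.parse
import urllib.request

# Placeholder sandbox base URL; use the FHIR R4 endpoint your EHR vendor provides.
FHIR_BASE = "https://fhir-sandbox.example.com/r4"


def build_patient_read(patient_id: str, token: str) -> urllib.request.Request:
    """Build a read-only FHIR 'read' request for one Patient resource."""
    return urllib.request.Request(
        url=f"{FHIR_BASE}/Patient/{urllib.parse.quote(patient_id, safe='')}",
        method="GET",
        headers={
            "Accept": "application/fhir+json",  # FHIR's JSON media type
            "Authorization": f"Bearer {token}",
        },
    )


req = build_patient_read("123", token="sandbox-token")
# Sending it against a real sandbox would be: urllib.request.urlopen(req, timeout=10)
```

Restricting the MVP to GET requests keeps it out of clinical write paths until the core workflow is validated.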

AI diagnostics workflow risk

If your MVP includes AI-assisted diagnostics, you must design explainability, human-in-the-loop review, and clear clinical boundaries. Early risk assessment is crucial since diagnostic features may trigger regulatory pathways (FDA/CE, depending on region).
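One way to encode human-in-the-loop review is a routing function in which the model can only suggest. In this hypothetical Python sketch, findings without an explanation are never surfaced, low-confidence findings get a full clinician review, and high-confidence ones still need clinician confirmation. The threshold and labels are placeholders, not clinical guidance.

```python
from dataclasses import dataclass

REVIEW_THRESHOLD = 0.90  # hypothetical; a real cut-off needs clinical validation


@dataclass
class AIFinding:
    label: str
    confidence: float  # model-reported probability, 0..1
    explanation: str   # evidence shown to the clinician, e.g. a highlighted region


def route(finding: AIFinding) -> str:
    """Every surfaced finding goes to a clinician; confidence only changes how it is queued.

    The model never finalizes a diagnosis on its own.
    """
    if not finding.explanation:
        return "discard_no_explanation"  # unexplained outputs are never shown
    if finding.confidence < REVIEW_THRESHOLD:
        return "clinician_full_review"
    return "clinician_confirm"
```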

Fintech MVPs

Fintech MVPs must prioritize trust, security, and compliance. Building minimal functionality doesn’t mean compromising the regulatory foundation.

KYC/AML

Identity verification, anti-money-laundering checks, and transaction monitoring are baseline requirements. Most fintech MVPs integrate third-party providers (e.g., Persona, Onfido, SumSub) to accelerate launch and maintain compliance.

Open Banking APIs

Modern fintech products rely on Open Banking / PSD2 APIs for account access, payments, and financial data. Start with one region or one provider (Plaid, Tink, TrueLayer) before expanding, and plan for sandbox testing environments in your MVP timeline.

Fraud detection AI

Machine-learning–based fraud scoring can significantly improve user safety, but should start simple: rules-based checks, basic anomaly detection, and clear escalation flows. Add automated ML pipelines only after gaining enough transactional data.
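The "start simple" progression can be sketched as a few hard rules plus a z-score check against the user's own history, with anything suspicious escalated to a human. All thresholds below are hypothetical placeholders; a z-score is a deliberately basic anomaly test, not an ML model.

```python
from statistics import mean, pstdev

HARD_LIMIT = 10_000  # hypothetical single-transaction limit
Z_THRESHOLD = 3.0    # flag amounts more than 3 standard deviations above the user's mean


def score_transaction(amount, history, country, home_country):
    """Return (decision, reasons) using simple rules plus a z-score anomaly check."""
    reasons = []
    if amount > HARD_LIMIT:
        reasons.append("over_limit")
    if country != home_country:
        reasons.append("foreign_country")
    if len(history) >= 5:  # require some history before the anomaly check means anything
        mu, sigma = mean(history), pstdev(history)
        if sigma > 0 and (amount - mu) / sigma > Z_THRESHOLD:
            reasons.append("amount_anomaly")
    if "over_limit" in reasons or len(reasons) >= 2:
        return "escalate_to_review", reasons  # a human analyst decides
    if reasons:
        return "allow_and_monitor", reasons
    return "allow", reasons
```

Every returned reason doubles as a label, so reviewed escalations become training data for a later ML model.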

Marketplace MVPs

Marketplace MVP success hinges on balancing the supply and demand sides quickly enough to prove liquidity.

Supply vs. demand seeding

For early traction, seed either supply or demand manually instead of expecting organic growth. Many marketplace MVPs start with a localized niche (one city or vertical) to build density before scaling horizontally.

Instant liquidity tactics

Use concierge onboarding, verified profiles, subsidized supply, or temporary “manual matching” to ensure users immediately find what they need. Liquidity is the #1 validation metric for marketplace MVPs in 2026.
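Fill rate (the share of demand-side requests that get matched) and time to match are two common ways to put a number on liquidity; which definition fits depends on the marketplace. A minimal Python sketch over hypothetical request records:

```python
from statistics import median


def liquidity_metrics(requests):
    """requests: list of dicts like {"matched": True, "minutes_to_match": 12.0}.

    fill_rate: share of demand-side requests that found supply.
    median_minutes_to_match: computed over matched requests only.
    """
    if not requests:
        return {"fill_rate": 0.0, "median_minutes_to_match": None}
    matched = [r for r in requests if r["matched"]]
    return {
        "fill_rate": len(matched) / len(requests),
        "median_minutes_to_match": (
            median(r["minutes_to_match"] for r in matched) if matched else None
        ),
    }


requests = [
    {"matched": True, "minutes_to_match": 5},
    {"matched": True, "minutes_to_match": 10},
    {"matched": True, "minutes_to_match": 30},
    {"matched": False, "minutes_to_match": None},
]
print(liquidity_metrics(requests))
```

Tracking both numbers per city or vertical shows where manual seeding is still needed.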

SaaS MVPs

SaaS MVPs must validate recurring value, not just one-time usage. Architecture and monetization decisions made early affect long-term scalability.

Multi-tenant architecture

A lightweight multi-tenant setup (shared DB with tenant isolation, or schema-per-tenant) enables fast onboarding and lower operational cost. This is essential for B2B SaaS that expects multiple customer accounts from day one.
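The shared-database variant depends on every query being scoped to a tenant. Below is a minimal sketch using Python's built-in `sqlite3` module; the table and tenant names are hypothetical. In production, databases such as PostgreSQL can enforce the same rule with row-level security, so a forgotten filter in application code does not leak data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE projects (id INTEGER PRIMARY KEY, tenant_id TEXT NOT NULL, name TEXT NOT NULL)"
)
conn.execute("CREATE INDEX idx_projects_tenant ON projects (tenant_id)")


class TenantRepo:
    """All data access goes through this class, which always filters by tenant_id."""

    def __init__(self, conn: sqlite3.Connection, tenant_id: str):
        self.conn = conn
        self.tenant_id = tenant_id

    def add_project(self, name: str) -> int:
        cur = self.conn.execute(
            "INSERT INTO projects (tenant_id, name) VALUES (?, ?)", (self.tenant_id, name)
        )
        return cur.lastrowid

    def list_projects(self) -> list:
        rows = self.conn.execute(
            "SELECT name FROM projects WHERE tenant_id = ? ORDER BY id", (self.tenant_id,)
        )
        return [row[0] for row in rows]


acme, globex = TenantRepo(conn, "acme"), TenantRepo(conn, "globex")
acme.add_project("Roadmap")
globex.add_project("Launch plan")
```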

Usage-based billing

2026 SaaS trends favor billing models tied to usage, API calls, seats, or compute. Integrate early with providers like Stripe Billing or Paddle to accurately track usage events and reduce billing friction at launch.
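Whichever provider you choose, usage has to be metered reliably on your side first. In this hypothetical sketch, events carry a unique ID so retried submissions are counted once, and totals are aggregated per customer, metric, and billing period. Reporting those totals to Stripe Billing, Paddle, or another provider is intentionally left out.

```python
from collections import defaultdict
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class UsageEvent:
    event_id: str     # unique per event, so client retries are not billed twice
    customer_id: str
    metric: str       # e.g. "api_calls"
    quantity: int
    period: str       # billing period, e.g. "2026-01"


class UsageMeter:
    def __init__(self):
        self.seen = set()
        self.totals = defaultdict(int)  # (customer, metric, period) -> quantity

    def ingest(self, event: UsageEvent) -> bool:
        """Idempotent ingestion: returns False for an already-seen event_id."""
        if event.event_id in self.seen:
            return False
        self.seen.add(event.event_id)
        self.totals[(event.customer_id, event.metric, event.period)] += event.quantity
        return True

    def amount_due(self, customer_id: str, metric: str, period: str, unit_price: Decimal) -> Decimal:
        """Aggregated usage times a per-unit price; Decimal avoids float rounding on money."""
        return self.totals[(customer_id, metric, period)] * unit_price
```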

AI MVPs

AI MVPs differ fundamentally from traditional software products—they require data pipelines, model orchestration, and predictable inference behavior from the start.

Model selection

Choose between hosted LLM APIs (GPT-5, Claude, Llama, Mistral), fine-tuned models, or self-hosted options based on performance, cost, and data sensitivity. Start with off-the-shelf APIs, then optimize once you validate market need.

Data pipelines

AI MVPs must collect clean data early. Set up ingestion, normalization, embedding storage, and monitoring workflows. Small MVP datasets can be enriched using synthetic data generation or transfer learning to improve early model performance.
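The first two pipeline stages, normalization and chunking, plus deduplication before anything reaches embedding storage, can be sketched with the standard library alone. The chunk sizes are hypothetical defaults, and the plain dict stands in for a vector store; the embedding call itself is omitted.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Collapse runs of whitespace; real pipelines also fix encodings and strip boilerplate."""
    return re.sub(r"\s+", " ", text).strip()


def chunk(text: str, max_words: int = 50, overlap: int = 10) -> list:
    """Split into overlapping word windows so context isn't cut at chunk edges."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be in [0, max_words)")
    words = text.split()
    chunks, start = [], 0
    while start < len(words):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break
        start += max_words - overlap
    return chunks


def ingest(docs, store: dict) -> int:
    """Normalize, chunk, and store new chunks keyed by content hash; returns how many were added.

    `store` stands in for an embedding/vector store; the embedding call is omitted.
    """
    added = 0
    for doc in docs:
        for piece in chunk(normalize(doc)):
            key = hashlib.sha256(piece.encode("utf-8")).hexdigest()
            if key not in store:  # skip exact duplicates so they are never embedded twice
                store[key] = piece
                added += 1
    return added
```

Hashing after normalization means trivially different copies (extra spaces, trailing newlines) are deduplicated too, which saves embedding cost on small MVP datasets.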

Prompt engineering infra

Build a prompt-layer system: versioning, templates, evaluation metrics, caching, vector search, and safety filters. This ensures output consistency and reduces inference costs as your MVP scales.
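Three of those pieces (versioned templates, rendering, and response caching) fit in a small class. This is a hypothetical sketch using Python's standard library; the `llm` argument stands in for whatever model client you use, and evaluation, vector search, and safety filters are left out. Caching only pays off when identical prompts recur and may be inappropriate for personalized or time-sensitive outputs.

```python
import hashlib
from string import Template
from typing import Callable, Optional


class PromptRegistry:
    """Hypothetical prompt layer: versioned templates plus a response cache."""

    def __init__(self):
        self.versions = {}  # name -> list of template strings (version 1 is index 0)
        self.cache = {}     # sha256(rendered prompt) -> cached model output

    def register(self, name: str, template: str) -> int:
        self.versions.setdefault(name, []).append(template)
        return len(self.versions[name])  # new 1-based version number

    def render(self, name: str, version: Optional[int] = None, **values: str) -> str:
        """Fill a template; defaults to the latest version, pass `version` to reproduce an older one."""
        templates = self.versions[name]
        template = templates[-1] if version is None else templates[version - 1]
        return Template(template).substitute(**values)

    def complete(self, prompt: str, llm: Callable[[str], str]) -> str:
        """Call the model only on a cache miss; identical prompts reuse the stored answer."""
        key = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
        if key not in self.cache:
            self.cache[key] = llm(prompt)
        return self.cache[key]
```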

How to Choose an MVP Development Partner in 2026

The best partners combine product thinking, engineering capability, and domain expertise. They should guide you through discovery, help refine your value proposition, build a scalable technical foundation, and support post-launch iteration — all while maintaining transparency and ownership integrity.

Below is the essential checklist for evaluating MVP vendors in 2026:

Technical depth

Your partner must demonstrate mastery of modern stacks (React, Flutter, Node.js, Python) and cloud ecosystems (AWS, GCP, Azure). They should be comfortable with API-first architecture, modular systems, and production-grade DevOps from day one.

AI capabilities

Given 2026 development norms, your MVP partner must understand LLM integration, vector databases, prompt engineering, model selection, AI cost optimization, and guardrail implementation. They should be able to design AI features and measure their impact.

Discovery maturity

Strong discovery prevents overscoping. Look for structured processes: user interview synthesis, AI-assisted competitor analysis, hypothesis mapping, RICE/MoSCoW prioritization, and clear success metrics before any coding starts.
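RICE scores a feature as (Reach × Impact × Confidence) / Effort. The sketch below uses the scales Intercom published with the framework (impact from 0.25 to 3, confidence as a fraction, effort in person-months); the feature names and numbers are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    reach: float       # users or events per quarter
    impact: float      # 3 massive, 2 high, 1 medium, 0.5 low, 0.25 minimal
    confidence: float  # 1.0 high, 0.8 medium, 0.5 low
    effort: float      # person-months

    @property
    def rice(self) -> float:
        return self.reach * self.impact * self.confidence / self.effort


def prioritize(features):
    """Highest RICE score first."""
    return sorted(features, key=lambda f: f.rice, reverse=True)


backlog = [
    Feature("SSO login", reach=200, impact=1, confidence=0.8, effort=2),       # RICE = 80
    Feature("AI summaries", reach=1000, impact=2, confidence=0.5, effort=4),   # RICE = 250
    Feature("CSV export", reach=500, impact=0.5, confidence=1.0, effort=0.5),  # RICE = 500
]
print([f.name for f in prioritize(backlog)])
```

Note how the low-confidence AI feature drops below a cheap, certain one; making that trade-off explicit before coding is the point of scoring.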

Transparent pricing

Your partner should provide line-item estimates for design, frontend, backend, AI modules, DevOps, QA, PM, and maintenance — including model inference costs. Transparency ensures predictable budgets and avoids surprise expenses.

Compliance expertise

For sectors like healthcare or fintech, choose a partner experienced with HIPAA, GDPR, KYC/AML, PCI-DSS, and data privacy engineering. Compliance baked into the MVP architecture avoids expensive retrofits and launch delays.

Post-MVP scaling support

The partner should help you prepare for growth: refactoring plans, cost optimization, model monitoring, analytics-based iteration, and support for new features after launch. MVPs are only the first step — future scalability matters.

Code ownership guarantees

Ensure you retain full intellectual property rights. Your vendor should deliver clean, documented, standards-aligned code, with access to repos, infrastructure, and CI/CD pipelines. Avoid partners who restrict access or maintain proprietary lock-in.

Why Choose Softermii

Softermii stands out as an MVP development partner by combining proven methodology, AI-driven acceleration, and deep product discovery expertise. Our approach is built around validated learning, lean delivery, transparent communication, and long-term scalability — ensuring your product moves from idea to market with clarity and confidence.

Our battle-tested product team has delivered MVPs across healthcare, fintech, real estate, marketplaces, and AI-first products. This cross-domain experience allows us to anticipate industry challenges early, design compliant architectures from day one, and reduce risk through informed technical decisions. Whether integrating HIPAA-ready workflows, embedding AI copilots, or architecting a multi-tenant SaaS system, our team brings years of specialized experience to each project.

We emphasize stability, transparency, and security in every engagement. That means crystal-clear pricing, predictable sprint planning, full code ownership for clients, and secure development practices aligned with GDPR, SOC 2 readiness, and modern cloud infrastructure. Case studies like VidRTC (a high-performance real-time communication platform) and Locum App (a healthcare staffing solution) demonstrate our ability to deliver complex MVPs with measurable business results.

Conclusion

Building an MVP in 2026 requires more than coding — it demands a strategic partner who understands AI-led product development, modern user expectations, and the realities of high-speed validation. Softermii helps founders, CTOs, and product leaders move from uncertainty to clarity with a proven, repeatable process grounded in discovery, data, and rapid iteration.

If you’re exploring an idea or planning an early-stage product, our team can help you assess feasibility, define scope, and estimate your budget with confidence. Let’s map your vision and build a market-ready MVP the right way.

Book your MVP scoping call — get a free consultation and a tailored technical feasibility session to jumpstart your product journey.

Frequently Asked Questions About MVP Development in 2026

1. How much does it cost to build an MVP in 2026?

MVP costs range from $30,000 to $300,000+, depending on complexity. Simple apps cost $30,000-$55,000 (5-8 weeks), standard SaaS MVPs run $55,000-$140,000 (8-14 weeks), and AI-powered MVPs cost $140,000-$300,000+ (3-6 months). Costs vary by team location: US developers charge $100-$200/hour while Eastern European teams charge $40-$80/hour for similar quality.

2. How long does MVP development take in 2026?

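Simple MVPs take 5-8 weeks, standard SaaS products need 8-14 weeks, and complex AI-powered MVPs require 3-6 months. AI coding assistants can make coding tasks 40-50% faster than traditional methods, though total timelines still depend on discovery, design, and testing. The timeline includes discovery (1-3 weeks), design (1-3 weeks), development (3-16 weeks), and testing (1-2 weeks); these phases often overlap, which is how a simple MVP can ship in 5 weeks even though the phase minimums add up to 6.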

3. What's the difference between an MVP and a prototype?

An MVP is a real, working product that customers can use and pay for. A prototype is a visual or clickable mockup that shows how the product would look but doesn't actually function. Prototypes cost $5,000-$25,000 (1-4 weeks) and help you pitch investors. MVPs cost $30,000-$300,000+ (5-24 weeks) and prove that people will actually use your product.

4. Should I hire a local team or outsource MVP development?

It depends on your budget and project complexity. US/UK teams ($80-$200/hour) offer easy communication but cost 2-4x more than offshore teams. Eastern European teams ($40-$80/hour) provide the best value, with strong skills and good English. Asian teams ($20-$50/hour) are the cheapest but may involve more communication challenges. For complex projects, where frequent communication matters most, choose local teams. For straightforward MVPs, offshore works well.
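
A quick way to sanity-check the "2-4x" figure is to apply the rate bands above to the same amount of work. The sketch below assumes a hypothetical 1,000-hour MVP; that hour count is an illustration only, and real effort varies widely with scope.

```python
# Hourly rate bands quoted in this FAQ answer (USD per hour).
RATE_BANDS = {
    "US/UK": (80, 200),
    "Eastern Europe": (40, 80),
    "Asia": (20, 50),
}

HOURS = 1_000  # hypothetical effort, for illustration only


def budget_range(hours: float, band: tuple[float, float]) -> tuple[float, float]:
    """Low and high budget for a given number of hours at a rate band."""
    low, high = band
    return hours * low, hours * high


def midpoint(band: tuple[float, float]) -> float:
    low, high = band
    return (low + high) / 2


for region, band in RATE_BANDS.items():
    low, high = budget_range(HOURS, band)
    print(f"{region:<15} ${low:>9,.0f} - ${high:>9,.0f}")

# Compare midpoint rates to see how large the US/UK premium is.
us_mid = midpoint(RATE_BANDS["US/UK"])
for region in ("Eastern Europe", "Asia"):
    ratio = us_mid / midpoint(RATE_BANDS[region])
    print(f"US/UK vs {region}: {ratio:.1f}x at midpoint rates")
```

At midpoint rates the US/UK premium comes out to about 2.3x over Eastern Europe and 4x over Asia, consistent with the 2-4x range; individual quotes can fall outside it because the bands are wide.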


Written by: